banditpylib.learners.mab_fcbai_learner

Classes

MABFixedConfidenceBAILearner: Base class for BAI learners in the ordinary multi-armed bandit
LilUCBHeuristic: LilUCB heuristic policy [JMNB14]

- class banditpylib.learners.mab_fcbai_learner.MABFixedConfidenceBAILearner(arm_num: int, confidence: float, name: Optional[str])

Base class for best-arm identification (BAI) learners in the ordinary multi-armed bandit

A learner of this kind aims to identify the best arm with a fixed confidence level.

- Parameters

arm_num (int) – number of arms

confidence (float) – confidence level. It should be within (0, 1). The algorithm should output the best arm with probability at least this value.

name (Optional[str]) – alias name

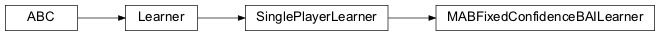

Inheritance

- property arm_num: int

Number of arms

- abstract property best_arm: int

Index of the best arm identified by the learner

- property confidence: float

Confidence level of the learner

- property goal: banditpylib.learners.utils.Goal

Goal of the learner

- property running_environment: Union[type, List[type]]

Type of bandit environment the learner plays with
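To make the contract above concrete, here is a minimal sketch of an abstract base class exposing the same core properties. It is not banditpylib's implementation: the class names `FixedConfidenceBAISketch` and `FirstArmLearner`, the `delta` helper (failure probability `1 - confidence`) and the `arm_num >= 2` check are assumptions made for illustration, and `goal` and `running_environment` are left out.

```python
from abc import ABC, abstractmethod
from typing import Optional


class FixedConfidenceBAISketch(ABC):
    """Hypothetical mirror of the documented base-class contract (not banditpylib code)."""

    def __init__(self, arm_num: int, confidence: float, name: Optional[str] = None):
        # Assumed validation: identifying a best arm needs at least two arms.
        if arm_num < 2:
            raise ValueError(f'arm_num is expected to be at least 2, got {arm_num}')
        # Documented constraint: confidence lies strictly within (0, 1).
        if not 0 < confidence < 1:
            raise ValueError(f'confidence must be within (0, 1), got {confidence}')
        self._arm_num = arm_num
        self._confidence = confidence
        self._name = name if name is not None else type(self).__name__

    @property
    def name(self) -> str:
        """Alias name, defaulting to the class name (assumed default)."""
        return self._name

    @property
    def arm_num(self) -> int:
        """Number of arms."""
        return self._arm_num

    @property
    def confidence(self) -> float:
        """Required probability of outputting the best arm."""
        return self._confidence

    @property
    def delta(self) -> float:
        """Allowed failure probability, 1 - confidence (hypothetical helper)."""
        return 1 - self._confidence

    @property
    @abstractmethod
    def best_arm(self) -> int:
        """Index of the best arm identified by the learner."""


class FirstArmLearner(FixedConfidenceBAISketch):
    """Toy subclass (hypothetical) that always reports arm 0."""

    @property
    def best_arm(self) -> int:
        return 0


learner = FirstArmLearner(arm_num=3, confidence=0.95)
print(learner.name, learner.arm_num, learner.best_arm)  # FirstArmLearner 3 0
```

Declaring `best_arm` as an abstract property means the base class cannot be instantiated directly: Python raises `TypeError` until a subclass overrides it, which matches the "abstract property" marking in the reference above.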

- class banditpylib.learners.mab_fcbai_learner.ExpGap(arm_num: int, confidence: float, threshold: int = 2, name: Optional[str] = None)

Exponential-gap elimination policy [KKS13]

- Parameters

arm_num (int) – number of arms

confidence (float) – confidence level. It should be within (0, 1). The algorithm should output the best arm with probability at least this value.

threshold (int) – within median elimination, switch to uniform sampling once the number of active arms is no greater than this threshold

name (Optional[str]) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- property best_arm: int

Index of the best arm identified by the learner

- reset()

Reset the learner

Warning

This function should be called before the start of the game.

- property stage: str

Stage of the learner
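The following is a rough, self-contained sketch of exponential-gap elimination as we read it from [KKS13], including how a `threshold` like the one documented above can cut median elimination short. It does not use banditpylib's `actions()` or protobuf types; instead it assumes a hypothetical `pull(arm, times)` callback returning the summed reward of `times` pulls, with rewards in [0, 1]. The round constants follow our reading of the paper, and the sample count in the uniform-sampling branch (a Hoeffding plus union-bound choice) is an assumption, so the library's exact constants may differ.

```python
import math
import random
from typing import Callable, Dict, List

# Hypothetical environment hook: pull(arm, times) returns the summed reward of
# `times` pulls of `arm`; rewards are assumed to lie in [0, 1].
Puller = Callable[[int, int], float]


def empirical_means(arms: List[int], times: int, pull: Puller) -> Dict[int, float]:
    """Pull every arm `times` times and return its average reward."""
    return {arm: pull(arm, times) / times for arm in arms}


def median_elimination(arms: List[int], eps: float, delta: float,
                       pull: Puller, threshold: int = 2) -> int:
    """Return an eps-good arm with probability >= 1 - delta (median elimination)."""
    active = list(arms)
    eps_l, delta_l = eps / 4, delta / 2
    while len(active) > 1:
        if len(active) <= threshold:
            # Assumed uniform-sampling rule: by Hoeffding and a union bound, this
            # many pulls put every mean within eps_l / 2 w.p. >= 1 - delta_l.
            times = math.ceil(2 / eps_l ** 2 * math.log(2 * len(active) / delta_l))
            means = empirical_means(active, times, pull)
            return max(active, key=lambda arm: means[arm])
        # (1 / (eps_l / 2)^2) * log(3 / delta_l) pulls per active arm.
        times = math.ceil(4 / eps_l ** 2 * math.log(3 / delta_l))
        means = empirical_means(active, times, pull)
        # Keep the better half so the active set always shrinks, even with ties.
        ranked = sorted(active, key=lambda arm: means[arm], reverse=True)
        active = ranked[:(len(ranked) + 1) // 2]
        eps_l, delta_l = 0.75 * eps_l, delta_l / 2
    return active[0]


def exp_gap(arm_num: int, confidence: float, pull: Puller, threshold: int = 2) -> int:
    """Exponential-gap elimination sketch following our reading of [KKS13]."""
    delta = 1 - confidence
    active = list(range(arm_num))
    r = 1
    while len(active) > 1:
        eps_r = 2 ** -r / 4
        delta_r = delta / (50 * r ** 3)
        # Fresh mean estimates for every active arm in this round.
        times = math.ceil(2 / eps_r ** 2 * math.log(2 / delta_r))
        means = empirical_means(active, times, pull)
        # Near-best reference arm found by median elimination.
        ref = median_elimination(active, eps_r / 2, delta_r, pull, threshold)
        # Drop arms that look more than eps_r worse than the reference.
        active = [arm for arm in active if means[arm] >= means[ref] - eps_r]
        r += 1
    return active[0]


# Illustrative run: three Bernoulli arms driven by a seeded RNG.
rng = random.Random(0)
arm_means = [0.2, 0.5, 0.8]
best = exp_gap(3, 0.95, lambda arm, times: sum(
    rng.random() < arm_means[arm] for _ in range(times)))
print(best)
```

The gap halves every round (`eps_r = 2 ** -r / 4`), so arms far from the best are discarded after a few cheap rounds, while close competitors survive until the accuracy is fine enough to separate them.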

- class banditpylib.learners.mab_fcbai_learner.LilUCBHeuristic(arm_num: int, confidence: float, name: Optional[str] = None)

LilUCB heuristic policy [JMNB14]

- Parameters

arm_num (int) – number of arms

confidence (float) – confidence level. It should be within (0, 1). The algorithm should output the best arm with probability at least this value.

name (Optional[str]) – alias name

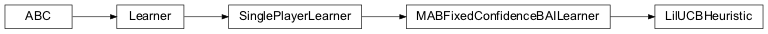

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- property best_arm: int

Index of the best arm identified by the learner
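Below is a hedged sketch of the lil'UCB heuristic from [JMNB14], written against a hypothetical single-pull callback `pull(arm)` rather than banditpylib's `actions()` interface. Each step pulls the arm with the largest upper confidence bound, whose width shrinks with the arm's pull count up to an iterated-logarithm factor, and the learner stops once one arm has been pulled `lam` times more often than all other arms combined. The heuristic settings (`epsilon = 0`, `beta = 1/2`, `lam = 1 + 10 / arm_num`, running with `delta / 5`) are as we recall them from the paper, `sigma = 0.5` assumes rewards in [0, 1], and the `+ 2` inside the iterated logarithm is added here only to keep it defined at one pull; treat all of them as assumptions.

```python
import math
import random
from typing import Callable


def lil_ucb_heuristic(arm_num: int, confidence: float,
                      pull: Callable[[int], float], sigma: float = 0.5) -> int:
    """Sketch of the lil'UCB heuristic [JMNB14]; parameter values are assumptions."""
    delta = (1 - confidence) / 5      # assumed heuristic: run with delta / 5
    beta = 0.5                        # assumed heuristic: beta = 1/2, epsilon = 0
    lam = 1 + 10 / arm_num            # assumed heuristic stopping factor
    pulls = [1] * arm_num             # initialize by pulling every arm once
    totals = [pull(arm) for arm in range(arm_num)]

    def ucb(arm: int) -> float:
        t = pulls[arm]
        # The "+ 2" keeps log(log(.)) well defined at t = 1 (assumption of this sketch).
        width = math.sqrt(2 * sigma ** 2 * math.log(math.log(t + 2) / delta) / t)
        return totals[arm] / t + (1 + beta) * width

    while True:
        total = sum(pulls)
        for arm in range(arm_num):
            # Stop once one arm has lam times more pulls than all the others combined.
            if pulls[arm] >= 1 + lam * (total - pulls[arm]):
                return arm
        chosen = max(range(arm_num), key=ucb)
        totals[chosen] += pull(chosen)
        pulls[chosen] += 1


# Illustrative run: three Bernoulli arms driven by a seeded RNG.
rng = random.Random(0)
arm_means = [0.1, 0.5, 0.9]
best = lil_ucb_heuristic(3, 0.95, lambda arm: float(rng.random() < arm_means[arm]))
print(best)
```

Stopping on pull counts rather than on confidence intervals is what makes this a heuristic: the UCB rule concentrates pulls on the apparent best arm, so a lopsided pull count is used as evidence that the arm is best.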