banditpylib.learners.mab_learner¶

Policies for ordinary multi-armed bandit with goal regret minimization.

We introduce notations in the following.

\(T\) |

game horizon |

\(N\) |

total number of arms |

\(i_t\) |

arm pulled at time \(t\) |

\(X_i^t\) |

empirical reward of arm \(i\) at time \(t\)

if arm \(i\) is pulled

|

\(T_i(t)\) |

number of times arm \(i\) is played before time \(t\) |

\(\bar{\mu}_i(t)\) |

empirical mean of arm \(i\) before time \(t\) |

\(\bar{V}_i(t)\) |

empirical variance of arm \(i\) before time \(t\) |

Classes¶

MABLearner: Abstract class for learners playing with the ordinary multi-armed banditEpsGreedy: Epsilon-Greedy policyThompsonSampling: Thompson Sampling policy [AG17]Uniform: Uniform policyUCBV: UCBV policy [AMSzepesvari09]EXP3: EXP3 policy [ACesaBianchiFS02]ExploreThenCommit: Explore-Then-Commit policySoftmax: Softmax policy

- class banditpylib.learners.mab_learner.MABLearner(arm_num: int, name: Optional[str])[source]¶

Abstract class for learners playing with the ordinary multi-armed bandit

This type of learners aim to maximize the total collected rewards.

- Parameters

arm_num (int) – number of arms

name (Optional[str]) – alias name

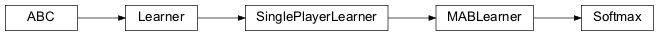

Inheritance

- property arm_num: int¶

Number of arms

- property goal: banditpylib.learners.utils.Goal¶

Goal of the learner

- property running_environment: Union[type, List[type]]¶

Type of bandit environment the learner plays with

- class banditpylib.learners.mab_learner.EpsGreedy(arm_num: int, eps: float = 1.0, name: Optional[str] = None)[source]¶

Epsilon-Greedy policy

With probability \(\frac{\epsilon}{t}\) do uniform sampling and with the remaining probability play the arm with the maximum empirical mean.

- Parameters

arm_num (int) – number of arms

eps (float) – epsilon

name (Optional[str]) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.UCB(arm_num: int, alpha: float = 2.0, name: Optional[str] = None)[source]¶

Upper Confidence Bound policy [ACBF02]

At time \(t\), play arm

\[\mathrm{argmax}_{i \in \{0, \dots, N-1\}} \left\{ \bar{\mu}_i(t) + \sqrt{ \frac{\alpha \ln(t) }{T_i(t)} } \right\}\]- Parameters

arm_num (int) – number of arms

alpha (float) – alpha

name (Optional[str]) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.ThompsonSampling(arm_num: int, prior_dist: str = 'beta', name: Optional[str] = None)[source]¶

Thompson Sampling policy [AG17]

Assume a prior distribution for every arm. At time \(t\), sample a virtual mean reward from the posterior distribution for every arm. Play the arm with the maximum sampled virtual mean reward.

- Parameters

arm_num (int) – number of arms

prior_dist (str) – prior distribution of thompson sampling. Only two priors are supported i.e., beta and gaussian

name (Optional[str]) – alias name

Warning

Reward should be Bernoulli when Beta prior is chosen.

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.Uniform(arm_num: int, name: Optional[str] = None)[source]¶

Uniform policy

Play each arm in a round-robin way.

- Parameters

arm_num (int) – number of arms

name (Optional[str]) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.UCBV(arm_num: int, b: float = 1.0, name: Optional[str] = None)[source]¶

UCBV policy [AMSzepesvari09]

At time \(t\), play arm

\[\mathrm{argmax}_{i \in \{0, \dots, N-1\}} \left\{ \bar{\mu}_i(t) + \sqrt{ \frac{ 2 \bar{V}_i(t) \ln(t) }{T_i(t)} }+ \frac{ b \ln(t) }{T_i(t)} \right\}\]- Parameters

arm_num (int) – number of arms

b (float) – upper bound of rewards

name (Optional[str]) – alias name

Note

Reward has to be bounded within \([0, b]\).

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.MOSS(arm_num: int, horizon: int, name: Optional[str] = None)[source]¶

MOSS policy [AB09]

At time \(t\), play arm

\[\mathrm{argmax}_{i \in \{0, \dots, N-1\}} \left\{ \bar{\mu}_i(t) + \sqrt{\frac{\mathrm{max}(\ln( \frac{T}{N T_i(t)} ), 0 ) }{T_i(t)} } \right\}\]- Parameters

arm_num (int) – number of arms

horizon (int) – total number of time steps

name (Optional[str]) – alias name

Note

MOSS uses time horizon in its confidence interval. Reward has to be bounded in [0, 1].

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.EXP3(arm_num: int, gamma: float = 0.01, name: Optional[str] = None)[source]¶

EXP3 policy [ACesaBianchiFS02]

At time \(t\), with probability \(\gamma\), uniformly randomly sample an arm to play. With the remaining probability i.e., \((1 - \gamma)\), sample arm \(i\) to play with sampling weight

\[\begin{split}\left\{ \begin{array} ~w_i^{t-1} & \text{if}~i_{t-1} \neq i \\ w_i^{t-1} \exp\left( \frac{\gamma}{N} \frac{X_i^{t-1}}{p_i^{t-1}} \right) & \text{if}~i_{t-1} = i \\ \end{array} \right.\end{split}\]where \(w_i^{t-1}\) and \(p_i^{t-1}\) denote the weight of arm \(i\) and the probability to pull arm \(i\) at time \((t-1)\) respectively and initially we set \(w_i^0 = 1\) for every arm \(i \in \{0, \dots, N-1\}\).

- Parameters

arm_num (int) – number of arms

gamma (float) – probability to do uniform sampling

name (str) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.ExploreThenCommit(arm_num: int, T_prime: int, name: Optional[str] = None)[source]¶

Explore-Then-Commit policy

During the first \(T' \leq T\) time steps (exploration period), play each arm in a round-robin way. Then for the remaining time steps, play the arm with the maximum empirical mean reward within exploration period consistently.

- Parameters

arm_num (int) – number of arms

T_prime (int) – time steps to explore

name (Optional[str]) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mab_learner.Softmax(arm_num: int, gamma: float = 1.0, name: Optional[str] = None)[source]¶

Softmax policy

At time \(t\), sample arm \(i\) to play with sampling weight

\[\exp\left( \bar{\mu}_i(t) / \gamma \right)\]where \(\gamma\) is a parameter to control how much exploration we want.

- Parameters

arm_num (int) – number of arms

gamma (float) – gamma

name (Optional[str]) – alias name

Note

When \(\gamma\) approaches 0, the learner will have an increasing probability to select the arm with the maximum empirical mean rewards. When \(\gamma\) approaches to infinity, the policy of the learner tends to become uniform sampling.

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions[source]¶

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take