banditpylib.learners.mnl_bandit_learner

Classes

- MNLBanditLearner: Abstract class for learners playing with the MNL bandit
- UCB: UCB policy [AAGZ19]
- EpsGreedy: Epsilon-Greedy policy
- ThompsonSampling: Thompson sampling policy [AAGZ17]

- class banditpylib.learners.mnl_bandit_learner.MNLBanditLearner(revenues: numpy.ndarray, reward: banditpylib.bandits.mnl_bandit_utils.Reward, card_limit: int, use_local_search: bool, random_neighbors: int, name: Optional[str])

Abstract class for learners playing with the MNL bandit

Product 0 is reserved for non-purchase, and its preference parameter is assumed to be 1.
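To make the role of product 0 concrete, here is a minimal sketch of the standard MNL choice probabilities with the non-purchase preference fixed at 1. The function names `choice_probabilities` and `expected_revenue` and the plain-list inputs are illustrative assumptions and are not part of banditpylib's API. The sketch also assumes that a non-purchase earns no revenue.

```python
def choice_probabilities(preferences, assortment):
    """Return {product_id: purchase probability} for one offered assortment.

    preferences[i] is the preference parameter of product i. Index 0 is the
    non-purchase option, whose parameter is assumed to be 1.
    """
    offered = sorted(set(assortment))
    denom = 1.0 + sum(preferences[i] for i in offered)
    probs = {0: 1.0 / denom}  # probability that the customer buys nothing
    for i in offered:
        probs[i] = preferences[i] / denom
    return probs


def expected_revenue(preferences, revenues, assortment):
    """Expected revenue of an assortment (assumes non-purchase earns nothing)."""
    probs = choice_probabilities(preferences, assortment)
    return sum(revenues[i] * p for i, p in probs.items() if i != 0)


print(choice_probabilities([1.0, 0.5, 0.5], [1, 2]))
# {0: 0.5, 1: 0.25, 2: 0.25}
```

Because the non-purchase parameter is pinned to 1, the other preference parameters are measured relative to it, which is why the denominator always starts at 1.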

- Parameters

revenues (np.ndarray) – product revenues

reward (Reward) – reward the learner wants to maximize

card_limit (int) – cardinality constraint

use_local_search (bool) – whether to use local search for searching the best assortment

random_neighbors (int) – number of random neighbors to look up if local search is enabled

name (Optional[str]) – alias name
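The interplay of `card_limit`, `use_local_search`, and `random_neighbors` can be illustrated with a hypothetical sketch. It is not the library's implementation: the helper names, the "toggle one product" neighborhood, and the `max_rounds` cap are all assumptions made for illustration. It contrasts an exhaustive search over assortments of at most `card_limit` products with a local search that inspects `random_neighbors` random neighbors per round.

```python
import random
from itertools import combinations


def expected_revenue(preferences, revenues, assortment):
    """MNL expected revenue; the non-purchase preference is taken to be 1."""
    denom = 1.0 + sum(preferences[i] for i in assortment)
    return sum(revenues[i] * preferences[i] for i in assortment) / denom


def exhaustive_search(preferences, revenues, card_limit):
    """Try every non-empty assortment with at most card_limit products."""
    products = range(1, len(preferences))
    max_size = min(card_limit, len(preferences) - 1)  # also handles inf
    best, best_value = set(), float("-inf")
    for size in range(1, max_size + 1):
        for candidate in combinations(products, size):
            value = expected_revenue(preferences, revenues, candidate)
            if value > best_value:
                best, best_value = set(candidate), value
    return best, best_value


def local_search(preferences, revenues, card_limit, random_neighbors, rng,
                 max_rounds=100):
    """Hill-climb from a random single product, toggling one product at a time."""
    products = list(range(1, len(preferences)))
    current = {rng.choice(products)}
    current_value = expected_revenue(preferences, revenues, current)
    for _ in range(max_rounds):
        improved = False
        for _ in range(random_neighbors):
            neighbor = current ^ {rng.choice(products)}  # add or drop one product
            if not neighbor or len(neighbor) > card_limit:
                continue  # skip empty or oversized assortments
            value = expected_revenue(preferences, revenues, neighbor)
            if value > current_value:
                current, current_value = neighbor, value
                improved = True
        if not improved:
            break
    return current, current_value
```

Exhaustive search grows combinatorially with the number of products, which is the usual motivation for a local search option. The local search may stop at a suboptimal assortment, so its value is never higher than the exhaustive optimum.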

Inheritance

- property card_limit: int

Cardinality limit

- property goal: banditpylib.learners.utils.Goal

Goal of the learner

- property product_num: int

Number of products (not including product 0)

- property random_neighbors: int

Number of random neighbors to look up when local search is enabled

- property revenues: numpy.ndarray

Revenues of products (product 0 is included)

- property reward: banditpylib.bandits.mnl_bandit_utils.Reward

Reward the learner wants to maximize

- property running_environment: Union[type, List[type]]

Type of bandit environment the learner plays with

- property use_local_search: bool

Whether local search is enabled

- class banditpylib.learners.mnl_bandit_learner.UCB(revenues: numpy.ndarray, reward: banditpylib.bandits.mnl_bandit_utils.Reward, card_limit: int = inf, use_local_search: bool = False, random_neighbors: int = 10, name: Optional[str] = None)

UCB policy [AAGZ19]

- Parameters

revenues (np.ndarray) – product revenues

reward (Reward) – reward the learner wants to maximize

card_limit (int) – cardinality constraint

use_local_search (bool) – whether to use local search for searching the best assortment

random_neighbors (int) – number of random neighbors to look up if local search is enabled

name (Optional[str]) – alias name

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mnl_bandit_learner.EpsGreedy(revenues: numpy.ndarray, reward: banditpylib.bandits.mnl_bandit_utils.Reward, card_limit: int = inf, use_local_search: bool = False, random_neighbors: int = 10, eps: float = 1.0, name: Optional[str] = None)

Epsilon-Greedy policy

At time step \(t\), with probability \(\frac{\epsilon}{t}\) sample an assortment uniformly at random; otherwise serve the assortment with the maximum empirical reward.
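The decaying exploration schedule can be sketched as follows. This is a minimal illustration, not the library's code: the helper names are hypothetical, time steps are assumed to start at 1, and capping the probability at 1 (relevant only when `eps` exceeds `t`) is an assumption of this sketch.

```python
import random


def explore_probability(eps, t):
    """Probability of uniform sampling at time step t (1-indexed)."""
    return min(1.0, eps / t)  # capping at 1 is an assumption of this sketch


def should_explore(eps, t, rng):
    """Return True to sample uniformly, False to serve the greedy assortment."""
    return rng.random() < explore_probability(eps, t)


rng = random.Random(0)
for t in (1, 2, 10, 100):
    print(t, explore_probability(1.0, t), should_explore(1.0, t, rng))
```

With the default `eps = 1.0`, the first step always explores and the exploration probability then shrinks as \(1/t\), so later steps are increasingly greedy.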

- Parameters

revenues (np.ndarray) – product revenues

reward (Reward) – reward the learner wants to maximize

card_limit (int) – cardinality constraint

use_local_search (bool) – whether to use local search for searching the best assortment

random_neighbors (int) – number of random neighbors to look up if local search is enabled

eps (float) – epsilon

name (Optional[str]) – alias name

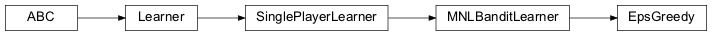

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take

- class banditpylib.learners.mnl_bandit_learner.ThompsonSampling(revenues: numpy.ndarray, horizon: int, reward: banditpylib.bandits.mnl_bandit_utils.Reward, card_limit: int = inf, use_local_search: bool = False, random_neighbors: int = 10, name: Optional[str] = None)

Thompson sampling policy [AAGZ17]

- Parameters

revenues (np.ndarray) – product revenues

horizon (int) – total number of time steps

reward (Reward) – reward the learner wants to maximize

card_limit (int) – cardinality constraint

use_local_search (bool) – whether to use local search for searching the best assortment

random_neighbors (int) – number of random neighbors to look up if local search is enabled

name (Optional[str]) – alias name

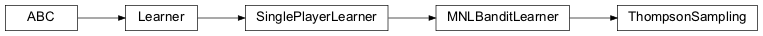

Inheritance

- actions(context: data_pb2.Context) → data_pb2.Actions

Actions of the learner

- Parameters

context – contextual information about the bandit environment

- Returns

actions to take